A Comprehensive Guide on How to Build an MLOps Pipeline

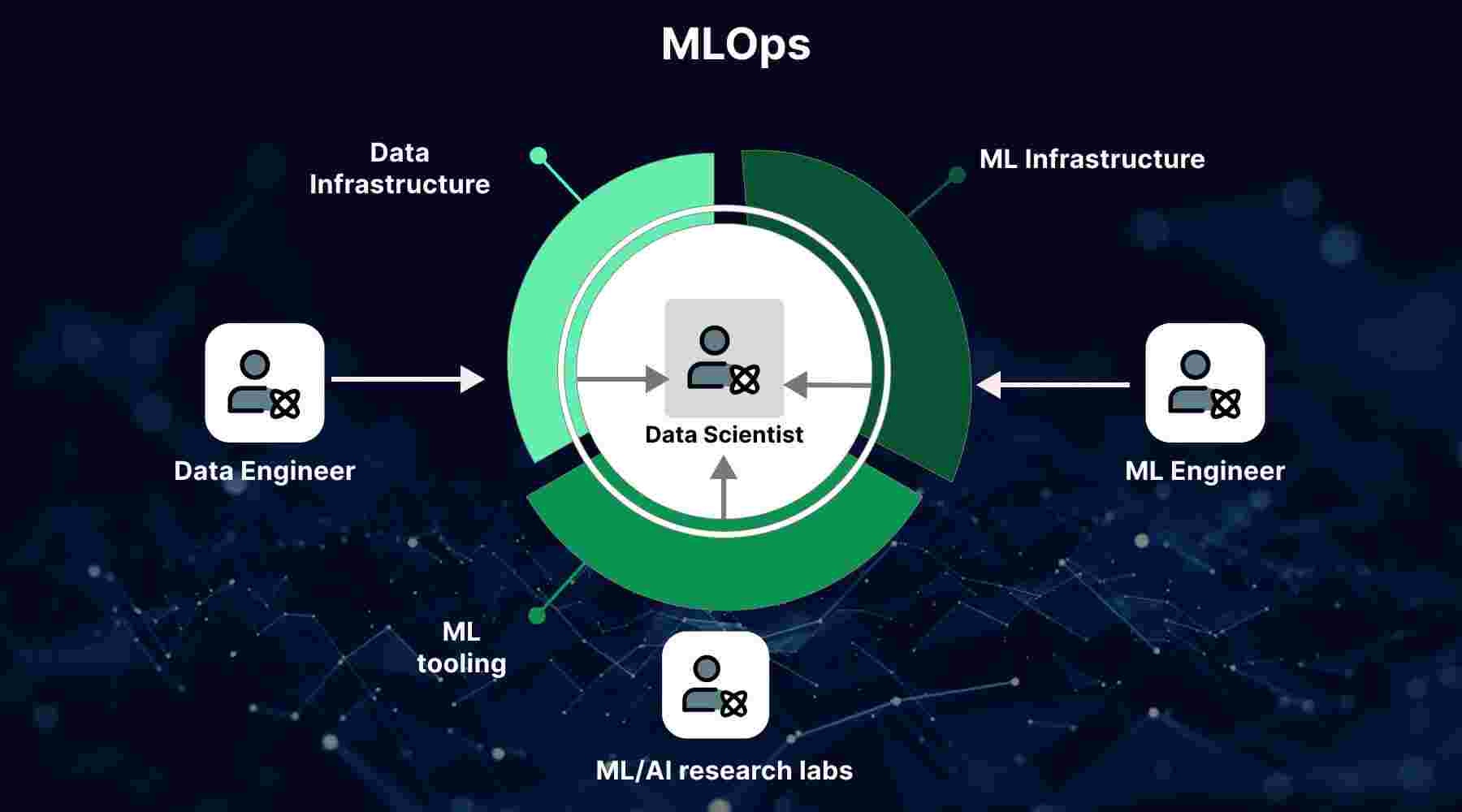

In recent years, machine learning operations (MLOps) has become essential for businesses that depend on machine learning and AI systems for strategic decision-making. A robust MLOps Pipeline streamlines machine learning workflows and makes them more consistent, scalable, and efficient. This guide outlines the steps needed to construct a high-quality MLOps Pipeline, detailing the role of AI development companies, the application of Natural Language Processing (NLP), and the collaboration needed across various teams.

What is an MLOps Pipeline?

An MLOps Pipeline is a set of processes and tools that automates the development, deployment, and monitoring of machine learning models. It integrates practices from DevOps and adapts them for machine learning, enabling AI development companies and data teams to quickly deploy machine learning projects and maintain high-quality outcomes.

The Importance of MLOps Pipeline in AI Development

In the AI industry, an MLOps Pipeline shortens the path from a trained model to production and helps models scale to meet business demands. For any AI development company, implementing MLOps reduces bottlenecks by letting data scientists and engineers collaborate on model development, testing, and deployment through one shared, automated workflow.

Steps to Build an MLOps Pipeline

Building an effective MLOps Pipeline involves several essential steps:

1. Data Collection and Preparation

Data is the foundation of any machine learning model, and ensuring high-quality data is the first critical step in the MLOps Pipeline. Here’s how to approach this stage:

- Collect Data: Identify sources and gather relevant datasets. This includes both historical and real-time data, depending on your project needs.

- Data Preprocessing: Clean, transform, and normalize the data to remove inaccuracies and reduce bias.

- Data Splitting: Split the data into training, validation, and test sets for reliable model evaluation.

An AI development company often integrates data from various sources, ensuring a pipeline that enhances data quality and relevance.
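The splitting step above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are assumptions chosen for the example, not values prescribed by any particular tool.

```python
import random


def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle records and split them into train, validation, and test lists."""
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty share for the test set")

    shuffled = list(records)               # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # a fixed seed keeps the split reproducible

    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains becomes the test set
    return train, val, test


# Illustrative data: 100 integer "records".
train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

Fixing the seed means the same records land in the same split on every run, which makes later experiments comparable. A random shuffle is only appropriate when the records are independent; time-ordered data usually calls for a chronological split instead.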

2. Model Building and Experimentation

After data preparation, the next step involves building and experimenting with models. This stage allows data scientists to evaluate different algorithms and hyperparameters to select the best-performing models.

- Experiment Tracking: Record the algorithm, hyperparameters, and results of every experiment so you can determine which combination works best. Tools like MLflow and Weights & Biases are often used.

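- Hyperparameter Tuning: Adjust hyperparameters, such as the learning rate or tree depth, and compare the results on the validation set to find the best-performing configuration.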

- Model Versioning: Maintain versions of models to simplify rollback and re-deployment if needed.

Experimentation tracking and versioning are essential as they help AI development companies maintain accountability and transparency throughout model development.
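To make the three practices above concrete, here is a minimal in-memory sketch of a grid search whose runs are logged and numbered. It deliberately does not use the MLflow or Weights & Biases APIs; `ExperimentLog`, `Run`, and `toy_validation_score` are hypothetical names, and the scoring function is a stand-in for "train a model and score it on the validation set".

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Run:
    version: int   # sequential version number, usable for rollback
    params: dict   # hyperparameters used for this run
    score: float   # validation score (higher is better)


@dataclass
class ExperimentLog:
    runs: list = field(default_factory=list)

    def record(self, params, score):
        run = Run(version=len(self.runs) + 1, params=dict(params), score=score)
        self.runs.append(run)
        return run

    def best(self):
        return max(self.runs, key=lambda r: r.score)


def toy_validation_score(learning_rate, depth):
    # Hypothetical stand-in for real training; peaks at learning_rate=0.1, depth=4.
    return 1.0 - abs(learning_rate - 0.1) - 0.05 * abs(depth - 4)


# Assumed search space for illustration only.
grid = {"learning_rate": [0.01, 0.1, 0.5], "depth": [2, 4, 8]}

log = ExperimentLog()
for values in itertools.product(*grid.values()):
    params = dict(zip(grid.keys(), values))
    log.record(params, toy_validation_score(**params))

best = log.best()
print(best.version, best.params, best.score)
```

Because every run keeps its parameters and a version number, any earlier configuration can be looked up and reproduced. A dedicated tracking tool adds persistence, artifacts, and a UI on top of the same idea.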

3. Model Training

Model training is one of the most resource-intensive stages in the MLOps Pipeline. The goal is to achieve a model that generalizes well to unseen data, using the data prepared in the initial stages.

- Distributed Training: For large datasets, distributed training is often employed to reduce training time.

- Resource Management: Allocate adequate resources, such as GPUs or TPUs, depending on the model complexity.

- Parallel Processing: Run multiple training processes in parallel for faster experimentation.

Through these practices, AI development companies can ensure that their models are trained efficiently without exhausting resources.
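As a small illustration of running several training jobs in parallel, the sketch below fits a one-parameter model with three different learning rates at once. The `train` function and the toy data are assumptions made for the example. It uses threads to stay self-contained; CPU-bound Python training would more typically run in separate processes or on a cluster scheduler.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy data following y = 2x, so the ideal weight is 2.0.
DATA = [(x, 2.0 * x) for x in range(1, 6)]


def train(learning_rate, epochs=200):
    """Fit y = w * x by gradient descent; return (learning_rate, w, mse)."""
    w = 0.0
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in DATA) / len(DATA)
        w -= learning_rate * grad
    mse = sum((w * x - y) ** 2 for x, y in DATA) / len(DATA)
    return learning_rate, w, mse


learning_rates = [0.001, 0.01, 0.05]

# Each learning rate is trained as an independent job; map() returns
# results in the same order as the inputs.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(train, learning_rates))

best_lr, best_w, best_mse = min(results, key=lambda r: r[2])
print(best_lr, best_w, best_mse)
```

The jobs share no state, which is what makes them safe to run side by side. The same structure carries over when each job is a full training run on its own GPU.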

4. Model Evaluation and Validation

Evaluating and validating models confirms that they perform accurately on unseen data. In this step, use the validation set to diagnose and fix performance issues, and reserve the test set for a final assessment, since repeatedly tuning against the test set makes its scores overly optimistic.

- Performance Metrics: Track metrics such as accuracy, precision, recall, and F1 score to ensure the model meets requirements.

- Cross-Validation: Perform cross-validation to verify the consistency of model performance.

- Error Analysis: Identify areas where the model performs poorly and iterate on them to improve.

This stage is critical for models involving Natural Language Processing (NLP), as performance metrics help AI development companies understand how well the model processes and understands language data.
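The metrics and cross-validation steps above can be written out directly. This is a minimal sketch for binary classification using only the standard library; the function names and the sample labels are assumptions for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


# Illustrative labels and predictions.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
folds = list(k_fold_indices(10, 3))
print(metrics)
print([len(val_idx) for _, val_idx in folds])
```

In cross-validation, the model is trained once per fold on `train_idx` and scored on `val_idx`. Scores that vary widely between folds suggest the model's performance depends heavily on which data it happened to see.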

5. Model Deployment

Once the model is trained and validated, it’s time to deploy it into a production environment where it can interact with real-world data. Effective model deployment includes:

- Containerization: Package the model and its dependencies in a container (using Docker, for instance) so that it runs consistently across environments.

- CI/CD Pipeline: Implement continuous integration and continuous deployment practices to automate the model deployment process.

- Scaling: Scale the deployment environment as needed, whether through cloud services or on-premise solutions.

With a well-established MLOps Pipeline, AI development companies can deploy models seamlessly, ensuring reliability in production.
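One common way to automate deployment decisions inside a CI/CD pipeline is a promotion gate: a check that runs after validation and decides whether the candidate model may replace the one in production. The sketch below is an assumption about how such a gate might look; `should_promote`, the metric names, and the thresholds are all hypothetical and would be set per project.

```python
def should_promote(candidate, production=None,
                   min_f1=0.80, max_latency_ms=100.0, min_gain=0.0):
    """Return (decision, reasons) for promoting a candidate model.

    `candidate` and `production` are dicts with "f1" and "latency_ms" keys.
    `production` is None when nothing has been deployed yet.
    """
    reasons = []
    if candidate["f1"] < min_f1:
        reasons.append(f"F1 {candidate['f1']:.2f} is below the minimum {min_f1:.2f}")
    if candidate["latency_ms"] > max_latency_ms:
        reasons.append(
            f"latency {candidate['latency_ms']:.0f} ms exceeds {max_latency_ms:.0f} ms"
        )
    if production is not None and candidate["f1"] - production["f1"] < min_gain:
        reasons.append("candidate does not improve on the production model")
    return (not reasons), reasons


# Illustrative metrics for a deployed model and two candidates.
production = {"f1": 0.83, "latency_ms": 35.0}
good_candidate = {"f1": 0.86, "latency_ms": 40.0}
slow_candidate = {"f1": 0.90, "latency_ms": 250.0}

print(should_promote(good_candidate, production))
print(should_promote(slow_candidate, production))
```

Returning the reasons alongside the decision lets the pipeline log why a deployment was blocked, which is easier to act on than a bare failure.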

6. Monitoring and Maintenance

Model monitoring is crucial to keep track of model performance over time. This is particularly important for dynamic data where patterns evolve, affecting the model’s accuracy.

- Performance Monitoring: Continuously monitor metrics such as latency, accuracy, and data drift.

- Retraining: When performance declines or the input data drifts, trigger retraining on recent data so the model stays reliable.

- Alerting: Set up alerts to notify teams of issues, enabling quick resolution.

By monitoring models, AI development companies ensure ongoing optimization of the MLOps Pipeline for performance and accuracy.
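Data drift monitoring and a retraining trigger can be sketched as follows. The example uses the Population Stability Index (PSI), one of several possible drift measures, to compare a feature's live distribution with its training-time reference. The function names, the 0.2 PSI threshold, and the 0.85 accuracy floor are assumptions for illustration and should be tuned per use case.

```python
import math


def population_stability_index(reference, live, bins=10, eps=1e-6):
    """PSI between two samples, binned over the range of the reference sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all reference values identical; avoid dividing by zero

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


def needs_retraining(psi, accuracy, psi_threshold=0.2, min_accuracy=0.85):
    """Trigger retraining when drift is high or accuracy has fallen too far."""
    return psi > psi_threshold or accuracy < min_accuracy


# Illustrative feature values: a training-time reference and a shifted live sample.
reference = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
print(psi_same, psi_shifted)
print(needs_retraining(psi_shifted, accuracy=0.91))
```

Drift on inputs can be measured immediately, while accuracy often lags because true labels arrive later. Checking both lets the pipeline react before a drop in accuracy becomes visible.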

Role of Natural Language Processing (NLP) in MLOps Pipeline

Natural Language Processing (NLP) is a common application in MLOps, particularly for text-based tasks like sentiment analysis, chatbot creation, and language translation. When implementing an MLOps Pipeline for NLP models, additional preprocessing steps such as tokenization, stop-word removal, and text normalization are often necessary, depending on the model being used.
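The three preprocessing steps named above can be illustrated with a short standard-library sketch. It assumes English text written in ASCII letters, and the stop-word list is a tiny hypothetical sample; real pipelines typically rely on a dedicated NLP library or the tokenizer that ships with the model.

```python
import re
import unicodedata

# A deliberately small, illustrative stop-word list.
STOP_WORDS = frozenset(
    {"a", "an", "and", "are", "in", "is", "it", "of", "the", "this", "to", "was"}
)


def preprocess(text, stop_words=STOP_WORDS):
    """Normalize, tokenize, and remove stop words from a piece of text."""
    # Text normalization: unify Unicode forms and lowercase everything.
    normalized = unicodedata.normalize("NFKC", text).lower()
    # Tokenization: keep runs of letters and digits, allowing forms like "don't".
    tokens = re.findall(r"[a-z0-9]+(?:'[a-z]+)?", normalized)
    # Stop-word removal: drop very common words that carry little signal.
    return [token for token in tokens if token not in stop_words]


tokens = preprocess("The delivery was FAST, and the support team is great!")
print(tokens)
```

Running the same function at training time and at inference time matters as much as the steps themselves, since a model fed differently preprocessed text in production will see inputs it was never trained on.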

Benefits of Building an MLOps Pipeline with AI Development Companies

Partnering with an experienced AI development company provides several benefits when building an MLOps Pipeline:

- Technical Expertise: Specialized knowledge in setting up pipelines, ensuring the smooth deployment of complex models.

- Resource Efficiency: Access to scalable cloud resources for model training, experimentation, and deployment.

- Reduced Time-to-Market: Faster implementation and reduced time-to-market, allowing businesses to gain a competitive edge.

- Scalability: Ensures the pipeline can handle large volumes of data and complex models without degradation in performance.

Conclusion

Building an MLOps Pipeline is essential for any organization looking to optimize its AI and machine learning processes. By following the steps outlined (data preparation, experimentation, model training, evaluation, deployment, and monitoring), companies can establish a reliable pipeline that supports the scalability and efficiency of their machine learning workflows. Partnering with a reputable AI development company can also streamline the process, offering expertise in Natural Language Processing (NLP), model deployment, and ongoing maintenance.
